It must have been love

This is the codename for my latest Hyper-V Packer Virtual Machine templates release.

When I dropped Hyper-V in favor of Proxmox, I intended to close this repository. I was worried about taking time away from my family and about the rising costs of running the required infrastructure and pipelines.

The former was resolved by writing, and mainly testing, at dawn (I am definitely a morning person): the quiet hours before meetings, obligations, and never-ending litanies of ‘Could you help me?’

The latter is resolved by running the BuyMeACoffee campaign – you can find the link in the README.

Long story short, here is the newest release of the Hyper-V templates:

## Version 3.0.0 2023-09-16

* [BREAKING_CHANGE] complete redesign of the build process. Instead of separate scripts, there is now a single generic `hv_generic.ps1` script, run with the proper parameters. This allows for easier maintenance and less clutter in the repository.

* [BREAKING_CHANGE] complete redesign of the `extra` folder structure. Instead of a primary structure based on hypervisor, the current structure focuses on OS type first, then, if needed, on hypervisor or cloud model. This allows for easier maintenance and less clutter in the repository.

* [BREAKING_CHANGE] complete redesign of the Windows Update process. Instead of the NuGet-based module, we now use the Packer `windows-update` plugin. This allows for easier maintenance and less clutter in the repository.

* [BREAKING_CHANGE] complete redesign of templates. Instead of separate files for each template, there is now a generic template per family (windows/rhel/ubuntu). This allows for easier maintenance and less clutter in the repository. Since Packer doesn’t support conditions, we’re using `empty` resources to change its flow.

* [BREAKING_CHANGE] dropping support for Vagrant builds. This can be reintroduced later, but for now these builds are removed.

* [BREAKING_CHANGE] dropping support for extra volumes for Docker. This is related to the removal of Docker from Kubernetes in favor of containerd, which forces different paths for containers.

* [BREAKING_CHANGE] dropping the tendency to customize images. We’ll try to generate images that are as generic as possible, without fundamental changes in them. Non-generic changes like adding Zabbix or Puppet will be removed in upcoming releases, as I believe this is not Packer’s role.

* [BREAKING_CHANGE] dropping support for CentOS 7.x

* [BREAKING_CHANGE] dropping support for Windows Server 2016

Vagrant images for Apple M1 Macbooks

New images are available for the arm64 M1 platform:

- AlmaLinux 9.0 arm64

- Ubuntu 22.04 arm64

https://app.vagrantup.com/marcinbojko/

https://github.com/marcinbojko/vagrant-boxes

Enjoy!

Debug Kubernetes pods with Lens or Rancher

When working with Kubernetes, you may need to debug a pod’s lifecycle or its network connectivity. I know several people who tirelessly add extra packages to every pod they debug, but I assure you – there is a better way, especially when you’re using Rancher or Lens (or both).

Today’s part – debugging sidecar in Lens.

As a first step, make sure your Kubernetes supports ephemeral containers: https://www.shogan.co.uk/kubernetes/enabling-and-using-ephemeral-containers-on-kubernetes-1-16/

The second step – download and add an extension to your Lens:

https://github.com/pashevskii/debug-pods-lens-extension

Third step: add a required image to your list. You can choose from predefined images like:

- busybox

- markeijserman/debug

- praqma/network-multitool

I’d also recommend the excellent swiss-army-knife: https://hub.docker.com/r/leodotcloud/swiss-army-knife

and an image maintained by me:

https://hub.docker.com/repository/docker/marcinbojko/alpine-tools

available on GitHub: https://github.com/marcinbojko/alpine-tools

After installing the extension, go to Preferences/Extensions and add a new image.

To attach a debug container, go to any pod you like, click the ‘Run as debug’ icon, choose an image, and … have fun!
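If the Lens extension is not an option, the same ephemeral-container mechanism can be reached from the command line with `kubectl debug`. The sketch below only assembles the command; the helper name `build_debug_command` and the pod and container names are placeholders made up for illustration.

```python
import shlex


def build_debug_command(pod, image="busybox", namespace="default", target=None):
    """Return the argv list that attaches an ephemeral debug container to a pod."""
    cmd = ["kubectl", "debug", "-it", pod, "--namespace", namespace, f"--image={image}"]
    if target:
        # --target asks for the process namespace of the named container
        cmd.append(f"--target={target}")
    return cmd


if __name__ == "__main__":
    # "my-app-pod" and "app" are hypothetical names, not taken from any real cluster
    print(shlex.join(build_debug_command("my-app-pod", image="busybox", target="app")))
```

Returning a list rather than a string means the result can be passed straight to `subprocess.run` without shell quoting concerns.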

Since I got my hands on Macbook …

I am not a fan of Apple Reservation 😉 But, when you’re in Rome …. blah blah

Fortinet NSE 1-3 Certificates

Definitely not the sharpest tool in the shed – but still worth a shot. Maybe for network people anything below NSE 4 is laughable, but for me, since I needed more of an overview and less of a “Wikipedia approach” to ‘show config’, these are quite valuable.

These certs are available free of charge at the Fortinet NSE Institute – https://training.fortinet.com/ – and will certainly enrich your general knowledge about products, threats, and their countermeasures.

Oracle Linux 8 support in the vagrant-boxes repository.

Welcome Oracle Linux next to CentOS/AlmaLinux/RockyLinux supported by me 😉

https://github.com/marcinbojko/vagrant-boxes

more info about roadmap:

https://github.com/marcinbojko/hv-packer/projects/2

Vagrant boxes for everyone

Since I learned about Vagrant, I have been using it in day-to-day operations – boxes are incredibly handy when you need to test or prepare something for non-prod usage.

For several years, I have been collecting different boxes for personal and company use. Slowly, the time has come to organize them and release them into the wild.

Feel free to use them, via this repository: https://github.com/marcinbojko/vagrant-boxes or simply by visiting my profile at Vagrant Cloud: https://app.vagrantup.com/marcinbojko

Learning Python #1 – Prometheus exporter for The Foreman

The need

The Foreman is a nice open-source ENC (External Node Classifier) for multiple executors (Ansible, Puppet, Chef, Saltstack) and it’s slowly going to be executor-agnostic: https://www.theforeman.org/

Since the internal Prometheus client exposes only data about the application itself, I found it useful to also expose the statuses and labels of managed hosts to Prometheus, and thus foreman-exporter was born: https://github.com/marcinbojko/foreman_exporter

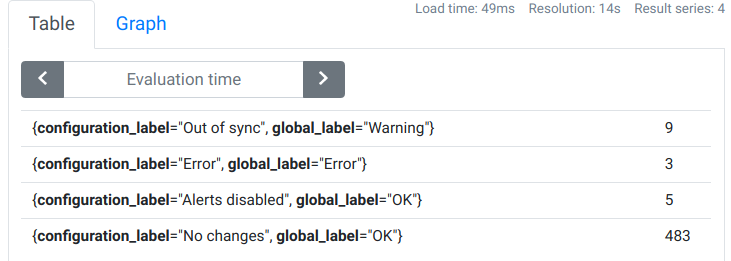

Queries

Let’s try a query about host statuses:

    count(foreman_exporter_hosts) by (global_label, configuration_label)

Adding the operating system to the table:

    count(foreman_exporter_hosts) by (global_label, configuration_label,operatingsystem)

Or just the hosts whose status is not “OK”, grouped by operating system:

    count(foreman_exporter_hosts {global_label!="OK"}) by (operatingsystem)
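To make the `count(...) by (...)` aggregation concrete, here is a small Python sketch of what Prometheus computes for the queries above. The sample hosts and their label values are invented; only the label names (`global_label`, `configuration_label`, `operatingsystem`) come from the queries, and `count_by` is a hypothetical helper, not part of the exporter.

```python
from collections import Counter

# Invented sample data: each dict stands for the label set of one
# foreman_exporter_hosts time series.
HOSTS = [
    {"hostname": "web01", "global_label": "OK", "configuration_label": "No changes", "operatingsystem": "CentOS 7"},
    {"hostname": "web02", "global_label": "OK", "configuration_label": "No changes", "operatingsystem": "CentOS 7"},
    {"hostname": "db01", "global_label": "Error", "configuration_label": "Out of sync", "operatingsystem": "Ubuntu 18.04"},
    {"hostname": "db02", "global_label": "Warning", "configuration_label": "Out of sync", "operatingsystem": "Ubuntu 18.04"},
]


def count_by(series, by, exclude=None):
    """Mimic `count(metric{label!="value"}) by (labels)` on a list of label sets."""
    exclude = exclude or {}
    # Keep only series that do not match any excluded label value
    kept = [s for s in series if all(s.get(k) != v for k, v in exclude.items())]
    # Group by the requested labels and count the series in each group
    return Counter(tuple(s[label] for label in by) for s in kept)


if __name__ == "__main__":
    print(count_by(HOSTS, ["global_label", "configuration_label"]))
    print(count_by(HOSTS, ["operatingsystem"], exclude={"global_label": "OK"}))
```

Each distinct combination of the grouping labels becomes one row in the result, which is why adding `operatingsystem` to the `by` clause splits the table further.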

Catching logs – Graylog is a good place to start.

During my talks with multiple clients, there was always a lot of noise around the ‘Observability’ buzzword, mostly followed by ‘prometheus’, ‘grafana’, ‘sensu’, and ‘datadog’ tags. That focus is quite right, but my second question was always ‘what about logs?’. Here the answers were not so unanimous. We could get:

- What about them?

- Yes, we have them, on hosts

- We don’t care about logs, only (prometheus/sensu/grafana/metrics) matters!

- We’re sending them to some Linux host via Syslog and we can browse them later

Sounds familiar? Yup.

More advanced answers were dancing around ELK stack, some of them mentioned cloud-native solutions like Google’s StackDriver, Amazon CloudWatch Logs, Azure Monitor.

For simple cases (regardless of whether they are on-premise or cloud-based) we can use a smaller setup of Graylog – https://www.graylog.org/

It can be used as an Enterprise-licensed stack for up to 5 GB of data per day. You get advanced analytics, archiving (long term – as long as you please), and alerts & event management, all in one box.

Sure, for production usage it’s recommended to rebuild this setup with more than one node, but for simpler, non-performance-greedy usage this can be a good start.

You can deliver logs (Windows logs, text file logs, and audit logs) using the appropriate Beats (https://www.elastic.co/beats/):

- Filebeat (Text logs) – https://www.elastic.co/beats/filebeat

- Winlogbeat (Windows Event Viewer) – https://www.elastic.co/downloads/beats/winlogbeat

- Auditbeat – https://www.elastic.co/beats/auditbeat

As a simple starter you can try using this setup – available as Traefik proxied setup:

Traefik 2.2 + docker-compose – easy start.

Traefik (https://containo.us/traefik/) is a cloud-native router (or, in our case, load balancer). From the start it has offered very easy integration with docker and docker-compose, using simple objects like labels instead of bulky, static configuration files.

So, why use it?

- cloud-ready (k8s/docker) support

- easy configuration, split into a static and a dynamic part. The dynamic part can (as the name suggests) change at runtime, and Traefik reacts and adjusts immediately.

- support for modern and intermediate cipher suites (TLS)

- support for HTTP(S) Layer7 load balance, as well as TCP and UDP (Layer 4)

- out of the box support for Let’s Encrypt – no need to reuse and worry about certbot

- out of the box prometheus metrics support

- docker/k8s friendly

In the attached example we’re going to use it to create a simple template (static Traefik configuration) plus a dynamic, docker-related config, which can be reused in any of your docker/docker-compose/swarm deployments.

Full example:

https://github.com/marcinbojko/docker101/tree/master/10-traefik22-grafana

traefik.yaml

    global:
      checkNewVersion: false
    log:
      level: DEBUG
      filePath: "/var/log/traefik/debug.log"
      format: json
    accessLog:
      filePath: "/var/log/traefik/access.log"
      format: json
    defaultEntryPoints:
      - http
      - https
    api:
      dashboard: true
    ping: {}
    providers:
      docker:
        endpoint: "unix:///var/run/docker.sock"
      file:
        filename: ./traefik.yml
        watch: true
    entryPoints:
      http:
        address: ":80"
        http:
          redirections:
            entryPoint:
              to: https
              scheme: https
      https:
        address: ":443"
      metrics:
        address: ":8082"
    tls:
      certificates:
        - certFile: "/ssl/grafana.test-sp.develop.cert"
          keyFile: "/ssl/grafana.test-sp.develop.key"
      stores:
        default:
          defaultCertificate:
            certFile: "/ssl/grafana.test-sp.develop.cert"
            keyFile: "/ssl/grafana.test-sp.develop.key"
      options:
        default:
          minVersion: VersionTLS12
          cipherSuites:
            - TLS_ECDHE_ECDSA_WITH_AES_256_GCM_SHA384
            - TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384
            - TLS_ECDHE_ECDSA_WITH_AES_128_GCM_SHA256
            - TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256
            - TLS_ECDHE_ECDSA_WITH_CHACHA20_POLY1305
            - TLS_ECDHE_RSA_WITH_CHACHA20_POLY1305
          sniStrict: true
    metrics:
      prometheus:
        buckets:
          - 0.1
          - 0.3
          - 1.2
          - 5
        entryPoint: metrics

In the attached example we have a basic configuration listening on ports 80 and 443, doing automatic redirection from 80 to 443, and enabling modern cipher suites with HSTS.

So, how do we attach a docker container to this configuration?

docker-compose

    version: "3.7"
    services:
      traefik:
        image: traefik:${TRAEFIK_TAG}
        restart: unless-stopped
        ports:
          - "80:80"
          - "443:443"
          - "8082:8082"
        networks:
          - front
          - back
        volumes:
          - /etc/localtime:/etc/localtime:ro
          - /var/run/docker.sock:/var/run/docker.sock:ro
          - ./traefik/etc/traefik.yml:/traefik.yml
          - ./traefik/ssl:/ssl
          - traefik_logs:/var/log/traefik
        labels:
          - "traefik.enable=true"
          - "traefik.http.routers.traefik-secure.entrypoints=https"
          - "traefik.http.routers.traefik-secure.rule=Host(`$TRAEFIK_HOSTNAME`, `localhost`)"
          - "traefik.http.routers.traefik-secure.tls=true"
          - "traefik.http.routers.traefik-secure.service=api@internal"
          - "traefik.http.services.traefik.loadbalancer.server.port=8080"
      grafana-xxl:
        restart: unless-stopped
        image: monitoringartist/grafana-xxl:${GRAFANA_TAG}
        expose:
          - "3000"
        volumes:
          - grafana_lib:/var/lib/grafana
          - grafana_log:/var/log/grafana
          - grafana_etc:/etc/grafana
          - ./grafana/provisioning:/usr/share/grafana/conf/provisioning
        networks:
          - back
        depends_on:
          - traefik
        labels:
          - "traefik.enable=true"
          - "traefik.http.routers.grafana-xxl-secure.entrypoints=https"
          - "traefik.http.routers.grafana-xxl-secure.rule=Host(`${GRAFANA_HOSTNAME}`,`*`)"
          - "traefik.http.routers.grafana-xxl-secure.tls=true"
          - "traefik.http.routers.grafana-xxl-secure.service=grafana-xxl"
          - "traefik.http.services.grafana-xxl.loadbalancer.server.port=3000"
          - "traefik.http.services.grafana-xxl.loadbalancer.healthcheck.path=/"
          - "traefik.http.services.grafana-xxl.loadbalancer.healthcheck.interval=10s"
          - "traefik.http.services.grafana-xxl.loadbalancer.healthcheck.timeout=5s"
        env_file: ./grafana/grafana.env
    volumes:
      traefik_logs: {}
      traefik_acme: {}
      grafana_lib: {}
      grafana_log: {}
      grafana_etc: {}
    networks:
      front:
        ipam:
          config:
            - subnet: 172.16.227.0/24
      back:
        ipam:
          config:
            - subnet: 172.16.226.0/24

Full example with Let’s Encrypt support:

https://github.com/marcinbojko/docker101/tree/master/11-traefik22-grafana-letsencrypt
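Because the dynamic part is just a list of labels, it is easy to generate for each new service. The helper below is a hypothetical convenience (the name `traefik_labels` is mine, not part of Traefik); it produces the same router and service labels as the `grafana-xxl` service in the compose file, with a single host in the rule and without the health-check labels. The hostname in the usage line is a placeholder.

```python
def traefik_labels(name, hostname, port, entrypoint="https"):
    """Build the docker labels that expose one service through Traefik over TLS."""
    router = f"{name}-secure"
    return [
        "traefik.enable=true",
        f"traefik.http.routers.{router}.entrypoints={entrypoint}",
        f"traefik.http.routers.{router}.rule=Host(`{hostname}`)",
        f"traefik.http.routers.{router}.tls=true",
        # Point the router at the service defined on the last line
        f"traefik.http.routers.{router}.service={name}",
        f"traefik.http.services.{name}.loadbalancer.server.port={port}",
    ]


if __name__ == "__main__":
    # "grafana.example.test" is a made-up hostname
    for label in traefik_labels("grafana-xxl", "grafana.example.test", 3000):
        print(f'- "{label}"')
```

The printed lines can be pasted under the `labels:` key of a service, which keeps the naming of routers and services consistent across deployments.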

Have fun!

That feeling

when, after six months of work, you’re going live with K8S 😉

Veeam Instant Recovery – when life is too short to wait for your restore to complete.

“Doing backups is a must – restoring backups is hell”

So how can we make this ‘unpleasant’ part more civilised? Let’s say our VM is a couple of hundred GB in size, maybe even a couple of TB, and for some reason we have to do some magic: restore, migrate, transfer – whatever is needed at the moment.

But against our will, physics is quite unforgiving in that matter – restoring takes time. Even if we have a bunch of speed-friendly SSD arrays and a 10G/40G network at our disposal, a few hours without their favourite data can still be a ‘no-go’ for our friends from the “business side”.

In this particular case, Veeam Instant Recovery comes to the rescue.

How does it work?

It uses quite an interesting trick – in reality, all you need to have your VM ready is to restore the small, KB-sized VM configuration, create some sparse files for the drives, and mount the drives themselves over the network. This way, within 3-5 minutes your machine is up and ready.

But, disks, you say, disks! “Where is my data, my precious data?”

It is still safe, protected in your backup repository, mounted here as read-only volumes.

So, after the initial deploy our VM is ready to serve requests. Meanwhile, in the Veeam B&R Console a task is waiting for you to choose: begin the migration from the repository onto the real server, or just power the VM off.

Phase 1 during restore:

- IO reads are served from the mounted (RO) disk in the backup repository

- IO writes from clients are served into a new drive snapshot (or written directly into the sparse Disk_1 file).

- In the background, data from the backup repository is being moved into the Disk_1_Sparse file.

Phase 2 begins when there is no data left to move from the backup repository – this initiates the merging phase, when the ‘snapshot’ (CHANGED DATA) is merged with the originally restored DISK_1.
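The two phases can be sketched as a toy copy-on-write model in Python. This is only an illustration of the idea described above, not Veeam’s actual implementation; the class name `InstantRecoveryDisk` and the block-level dictionaries are assumptions made for the example.

```python
class InstantRecoveryDisk:
    """Toy model of an instantly recovered disk (not Veeam's real mechanism)."""

    def __init__(self, backup_blocks):
        self.backup = dict(backup_blocks)  # read-only copy in the backup repository
        self.restored = {}                 # Disk_1: blocks already moved to the host
        self.snapshot = {}                 # blocks changed by clients during restore

    def read(self, block):
        # Newest data wins: client writes, then restored blocks, then the repository
        if block in self.snapshot:
            return self.snapshot[block]
        if block in self.restored:
            return self.restored[block]
        return self.backup[block]

    def write(self, block, data):
        # Phase 1: writes never touch the backup, they land in the snapshot
        self.snapshot[block] = data

    def migrate_one(self):
        """Background task: move one block from the repository; False when done."""
        pending = [b for b in self.backup if b not in self.restored]
        if not pending:
            return False
        self.restored[pending[0]] = self.backup[pending[0]]
        return True

    def merge(self):
        # Phase 2: fold the changed data into the restored disk
        self.restored.update(self.snapshot)
        self.snapshot.clear()


if __name__ == "__main__":
    disk = InstantRecoveryDisk({0: "boot", 1: "data-old"})
    disk.write(1, "data-new")      # client write while the restore is running
    while disk.migrate_one():      # phase 1 background copy
        pass
    disk.merge()                   # phase 2
    print(disk.restored)
```

Note that until `merge()` finishes, the model holds both the restored blocks and the changed blocks at the same time, which is where the extra space requirement during a restore comes from.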

As with everything, there are a few PROS and CONS.

PROS:

- the machine is available within a few minutes, regardless of its size

- during an Instant Recovery restore, the VM is alive and can (slowly) process all requests

CONS:

- restoring can take a little longer than a real ‘offline’ restore

- the space needed during the restore process can be as much as twice your VM size. If you run out of space during the restore, the process will fail and you’ll lose all new data.

- your data is served with some delay – to read a needed block, the VM has to fetch it from the repository, which means on-the-fly deduplication and decompression

- if your VM is under heavy use, especially on IO writes, restoring can take much longer than anticipated, as there will be no IO left to serve reads and the transfer from the original disk.

- if your restore fails for ANY reason, your data is inconsistent. You’ll have to repeat the process from point 0 – any data changed during the previous restore attempt will be discarded.

So which targets should be ideal for this process?

Any VM which doesn’t change data much but needs to be restored within a few minutes:

- frontend servers

Any VM with small-to-medium IO read usage and not many IO writes:

- fileservers

Which targets should we avoid?

- database servers

- cluster role members/nodes